April 20, 2017

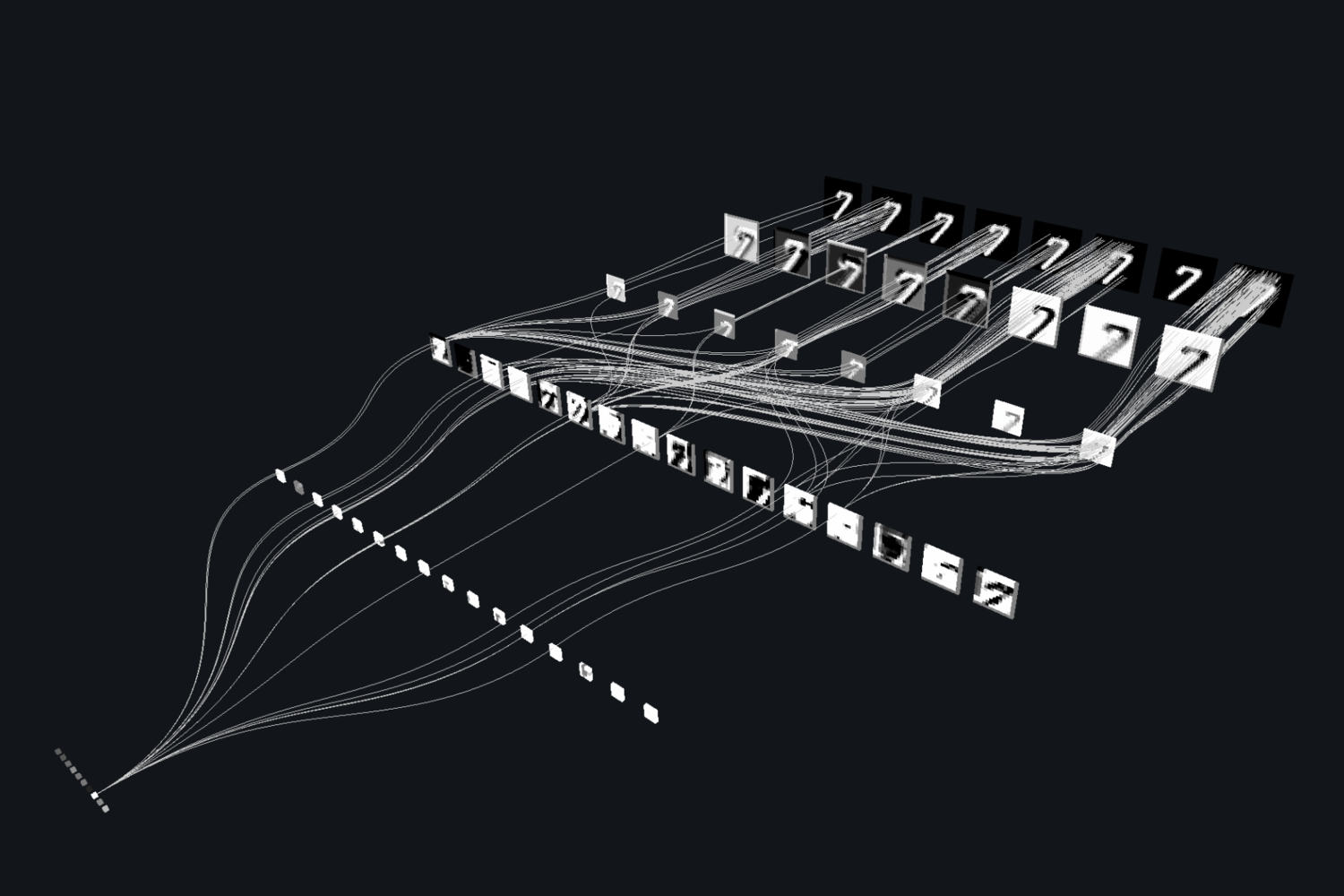

Deep Recurrent Dialogue Generation

In the conference paper "Deep Reinforcement Learning with Hierarchical Recurrent Encoder-Decoder for Conversation", we trained an open-domain dialogue agent to carry out an “engaging conversation”, marked by topic-coherent responses to the speaker's queries followed by open-ended questions that prolong the conversation. The agent was trained end-to-end: a hierarchical recurrent neural network learned the grammar model, and small-talk etiquette was learned over time through deep reinforcement learning with handcrafted reward functions.
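The reward functions themselves are not spelled out here, so the following is only a minimal sketch of what a handcrafted "engagement" reward might look like, assuming two illustrative terms that mirror the goals above: topic coherence, approximated as bag-of-words cosine similarity between the speaker's query and the agent's response, and a bonus when the response ends with an open-ended question. The function names (`engagement_reward`, `topic_coherence`, `ends_with_open_question`), the list of question openers, and the weights are hypothetical choices for illustration, not the paper's method.

```python
import math
import re
from collections import Counter

# Hypothetical openers treated as signalling an open-ended question (assumption).
OPEN_ENDED_OPENERS = ("what", "how", "why", "which", "where", "who", "tell me")


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def topic_coherence(query: str, response: str) -> float:
    """Crude topic-coherence proxy: lexical overlap of query and response."""
    return cosine_similarity(Counter(tokenize(query)), Counter(tokenize(response)))


def ends_with_open_question(response: str) -> bool:
    """True if the last sentence is a question that starts with an open-ended opener."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", response.strip()) if s.strip()]
    if not sentences or not sentences[-1].endswith("?"):
        return False
    return sentences[-1].lower().startswith(OPEN_ENDED_OPENERS)


def engagement_reward(query: str, response: str,
                      w_coherence: float = 1.0, w_question: float = 0.5) -> float:
    """Hypothetical handcrafted reward: weighted coherence plus an open-question bonus."""
    reward = w_coherence * topic_coherence(query, response)
    if ends_with_open_question(response):
        reward += w_question
    return reward


if __name__ == "__main__":
    query = "I have been listening to jazz lately."
    engaging = "Jazz is great. What albums do you like?"
    off_topic = "Football is fun."
    print(engagement_reward(query, engaging), engagement_reward(query, off_topic))
```

A yes/no question such as "Do you like jazz?" earns no bonus under these heuristics, since it does not invite the speaker to elaborate. In a reinforcement learning setup, a scalar like this is the kind of signal a policy-gradient method (for example REINFORCE) would use to weight the log-probability of a sampled response; the lexical heuristics here are stand-ins for whatever scoring the paper actually used.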
